Large Language Models vs Symbolic AI

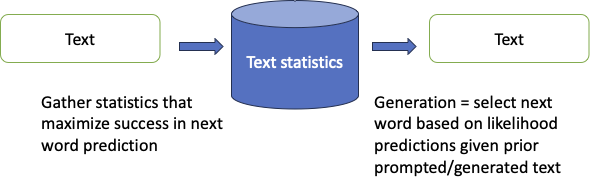

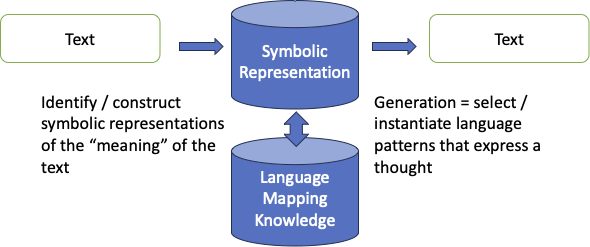

| LLMs | Symbolic AI |
|---|---|

Do we still need symbolic AI?

- It depends.
- What was your goal?
  - To build intelligent machines?
    - Are LLMs a significant advance in building intelligent machines?
    - Are we done?
  - To model human intelligence and learning?
    - Are LLMs a plausible model of human cognition and learning?
    - Do they answer questions about how we think?

Hinton's View

Some claims

| Claim | Questions |
|---|---|
| The human brain works like LLMs do. | Do humans learn like LLMs do? |
| Neural networks are more biological than symbolic AI. | Should/must AI research emulate evolution, i.e., neurons before reasoning? Does thought require neurons? |

One possible compromise

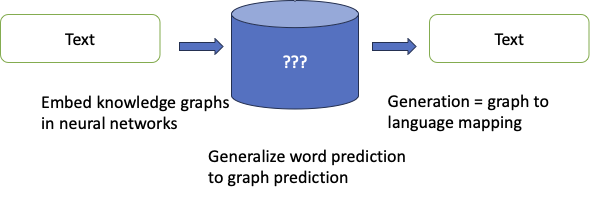

Neuro-symbolic AI

- Scalable, robust, predictable

- Language-independent, multi-modal

- Human-level training requirements
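The slide leaves the architecture open. One possible pattern, sketched here purely as an illustration, splits the work: a learned component proposes candidate facts with confidence scores, and a symbolic component reasons over the accepted facts with explicit rules. The function `neural_propose` is a hypothetical stand-in for a trained model (it just returns hard-coded scores), and the single transitivity rule for `part_of` and the `threshold` value are assumptions chosen for the example, not a method taken from these slides.

```python
from typing import Dict, Set, Tuple

Triple = Tuple[str, str, str]  # (subject, relation, object)


def neural_propose() -> Dict[Triple, float]:
    """Hypothetical stand-in for a learned model (e.g. an LLM or GNN)
    that extracts candidate facts with confidence scores.
    The scores are hard-coded for illustration only."""
    return {
        ("wheel", "part_of", "car"): 0.95,
        ("car", "part_of", "traffic"): 0.40,
        ("spoke", "part_of", "wheel"): 0.90,
    }


def accept(candidates: Dict[Triple, float], threshold: float) -> Set[Triple]:
    """Neural-to-symbolic interface: keep only facts scored at or above the threshold."""
    return {t for t, score in candidates.items() if score >= threshold}


def forward_chain(facts: Set[Triple]) -> Set[Triple]:
    """Symbolic step: apply the assumed rule
    part_of(x, y) and part_of(y, z) -> part_of(x, z)
    repeatedly until no new facts appear (a fixed point)."""
    derived = set(facts)
    while True:
        new = {
            (x, "part_of", z)
            for (x, r1, y) in derived if r1 == "part_of"
            for (y2, r2, z) in derived if r2 == "part_of" and y2 == y
        } - derived
        if not new:
            return derived
        derived |= new


if __name__ == "__main__":
    kb = forward_chain(accept(neural_propose(), threshold=0.5))
    for fact in sorted(kb):
        print(fact)
```

Everything downstream of the threshold is deterministic and can be inspected rule by rule, which is one way to read the "predictable" goal above; the low-confidence `car part_of traffic` candidate never enters the reasoning step.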

Readings

- Neurosymbolic AI for Reasoning over Knowledge Graphs: A Survey

- Knowledge Graphs -- as inputs to or outputs of ML programs

- A Gentle Introduction to Graph Neural Networks -- implementing graphs in networks

- Manling Li's work on Event Schema Induction

- Yejin Choi's work on commonsense inference
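As a rough illustration of what "implementing graphs in networks" can mean, here is a sketch of one round of message passing, the core operation in many graph neural networks: each node's new feature vector mixes its own features with the mean of its neighbours' features. The example graph, the feature values, and the fixed mixing weight `alpha` are assumptions for illustration; a real GNN would use learned weight matrices and a nonlinearity instead of a single constant.

```python
from typing import Dict, List

Graph = Dict[str, List[str]]       # adjacency list: node -> neighbours
Features = Dict[str, List[float]]  # node -> feature vector


def message_pass(graph: Graph, feats: Features, alpha: float = 0.5) -> Features:
    """One round of mean-aggregation message passing:
    new_h[v] = alpha * h[v] + (1 - alpha) * mean(h[u] for u in neighbours(v)).
    `alpha` is a fixed stand-in for learned weights (an assumption)."""
    updated: Features = {}
    for node, h in feats.items():
        neighbours = graph.get(node, [])
        if neighbours:
            # Average each feature dimension over the neighbours.
            agg = [sum(feats[u][i] for u in neighbours) / len(neighbours)
                   for i in range(len(h))]
        else:
            agg = h  # isolated node: nothing to aggregate, keep own features
        updated[node] = [alpha * a + (1 - alpha) * b for a, b in zip(h, agg)]
    return updated


if __name__ == "__main__":
    # Hypothetical undirected path graph a - b - c.
    g = {"a": ["b"], "b": ["a", "c"], "c": ["b"]}
    f = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
    print(message_pass(g, f))  # {'a': [0.5, 0.5], 'b': [0.5, 0.75], 'c': [0.5, 1.0]}
```

Stacking several such rounds lets information flow along longer paths in the graph, which is how graph structure (for example, a knowledge graph used as input) ends up shaping what the network computes.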