Shu Ha Ri

Though there are many development practices associated with agile, like standups, backlogs, iterations, and so on, there is no set of practices you must do to be agile. The last paragraph of The Agile Samurai says it perfectly:

So, don't get hung up on the practices. Take what you can from this book, and make it fit your unique situation and context. And whenever you are wondering whether you are doing things the "agile way," instead ask yourself two questions:

- Are we delivering something of value every week?

- Are we striving to continuously improve?

If you can answer yes to both those questions, you're being agile.

But this doesn't mean anything goes. You only get to answer yes to those questions through constant improvement.

There is a concept in learning agile, inspired by martial arts, called Shu Ha Ri: imitate / adapt / innovate.

- Shu: Imitate. Do the practices given, until you understand them. At first, many won't work because you don't know how to do them well. Repeat until the practices become second nature.

- Ha: Adapt. When you understand the causalities of the practices and you have trustworthy measures of the metrics that matter, identify areas for improvement. Try a common alternative agile practice that might help. Measure. Repeat.

- Ri: Innovate. Create new practices.

Every team and every organization is different. What works for students in a 10-week class will differ from what works for full-time developers in a company with a dedicated workspace, and will differ yet again for developers working remotely in a geographically distributed virtual team.

The idea is to master a good starting set of practices, then gradually evolve better ones.

Coding Practices

The following practices have been found to work well for students in this class. Some take several weeks to become natural. Many are probably very different from anything you've done before. That's because many common beliefs about development are wrong.

Swarming

- Swarm

- Code as a team two to three times a week, several days apart.

- If a team can't find at least two common times, find three or four with large overlapping subgroups such that everyone can come to at least two meetings per week.

- The entire team works together to kick off anything that's brand new and big.

- All design decisions -- database organization, UI look and feel, library selection -- should be made by the whole team.

- Swarm for 90 minutes to 2 hours.

- Start by defining the coding goals to accomplish for this session.

- Form subgroups as appropriate to do those tasks.

- Two people for simple well-understood tasks.

- Three or four for tasks that have complicated logic or are doing something unfamiliar.

- Finish the swarm with a mood chart update, as described below.
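The scheduling rule above (everyone can come to at least two swarms per week) is easy to check mechanically. Here is a minimal sketch, assuming a hypothetical availability map from meeting slot to the set of members who can attend. The function name, member names, and slots are made up for illustration.

```python
from collections import Counter

def members_short_on_swarms(availability, team, minimum=2):
    """Return team members who can attend fewer than `minimum` swarm slots."""
    counts = Counter(
        member for attendees in availability.values() for member in attendees
    )
    return sorted(m for m in team if counts[m] < minimum)

# Hypothetical example data: slot -> members who can attend.
team = ["Ana", "Ben", "Chi", "Dev"]
availability = {
    "Mon 3pm": {"Ana", "Ben", "Chi"},
    "Wed 5pm": {"Ben", "Chi", "Dev"},
    "Fri 1pm": {"Ana", "Dev"},
}
print(members_short_on_swarms(availability, team))  # prints []
```

An empty list means the proposed slots satisfy the rule. Any names returned need another slot.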

Rotate coding frequently

- Rotate who is at the keyboard every 15 minutes.

- That may seem short, but half an hour is forever to wait from the viewpoint of the third person in line.

- Use an audible timer alarm to signal when to rotate.

- When rotating,

- the current coder commits and pushes the code to the branch

- the next coder pulls the branch to their machine

- Create a rotation order at the start of the swarm.

- Start with the team members who have coded the least so far.

- The first coder creates a feature branch off main and begins entering code.

- Decide as a team what the person at the keyboard should enter.

- When the task is complete, merge the branch to main and delete it.

- When the subgroup hits a problem, they speak up/Slack/text/email/call other team members for help.
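The rotation rules above can be sketched in a few lines. This is an illustration only: it assumes the team keeps a record of minutes coded so far per member, and the function names and sample numbers are hypothetical.

```python
def rotation_order(minutes_coded):
    """Order coders from least to most time at the keyboard so far."""
    # sorted() is stable, so ties keep the order in which members were listed.
    return sorted(minutes_coded, key=minutes_coded.get)

def rotation_schedule(order, session_minutes=90, turn_minutes=15):
    """Return (start_minute, coder) pairs, cycling through the order."""
    turns = session_minutes // turn_minutes
    return [(i * turn_minutes, order[i % len(order)]) for i in range(turns)]

# Hypothetical record of minutes coded so far.
minutes_coded = {"Ana": 45, "Ben": 10, "Chi": 30}
order = rotation_order(minutes_coded)
print(order)  # ['Ben', 'Chi', 'Ana']
print(rotation_schedule(order, session_minutes=60))
# [(0, 'Ben'), (15, 'Chi'), (30, 'Ana'), (45, 'Ben')]
```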

Maximize learning

- Choose team members to work on a task who are least familiar with the code to be changed.

- Choose team members to work together who have not worked together recently. (See the dashboard collaboration section below.)

- When creating code rotations, start with those who have coded the least so far.
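One way to act on the "have not worked together recently" guideline is to rank possible pairs by when they last partnered. The sketch below assumes partnering notes are kept as hypothetical `(week, member_a, member_b)` records with positive week numbers. It is one possible approach, not a prescribed tool.

```python
from itertools import combinations

def suggest_pairs(team, history):
    """Rank pairs so those who worked together least recently come first.

    `history` is a list of (week, member_a, member_b) partnering notes.
    Pairs that have never worked together sort ahead of everyone else.
    """
    last_week = {}
    for week, a, b in history:
        pair = frozenset((a, b))
        last_week[pair] = max(week, last_week.get(pair, week))

    def key(pair):
        # -1 is a sentinel meaning "never paired"; real weeks are positive.
        return last_week.get(frozenset(pair), -1)

    return sorted(combinations(sorted(team), 2), key=key)

# Hypothetical partnering notes.
team = ["Ana", "Ben", "Chi"]
history = [(1, "Ana", "Ben"), (3, "Ben", "Chi"), (2, "Ana", "Ben")]
print(suggest_pairs(team, history))
# [('Ana', 'Chi'), ('Ana', 'Ben'), ('Ben', 'Chi')]
```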

Minimize work in progress, maximize value first

- Keep a shared prioritized backlog of user stories.

- The team decides what form to use, e.g., a Trello board, a GitHub project, or a spreadsheet.

- Stories on the backlog are in priority order, most important first.

- Finish one story before beginning another.

- Pull, don't push.

Review and Improve

When someone asks an agile discussion group what practices are required by agile, the answer usually comes down to just one: retrospectives. Retrospectives are when agile teams review what is and isn't working and try changes to how they work to improve things.

Agile retrospectives are often done at the end of each iteration, but can be more often. A standard exercise is start-stop-continue, but there are many other ways to run a retrospective.

Unfortunately, retrospectives often end up creating a list of things to fix, week after week, with no change. A better approach is small experiments: pick one problem, make one very small change to how the team works, and define how you will concretely measure whether the change helped or hurt. At the next retrospective, look at the results and decide whether a new change is needed or you can focus on another problem. Problems should be easy to measure, and changes should be specific and easy to try, like this:

- Problem: some people got very little time to code because coders keep going over their rotation time

- Change: the next coder in line calls out when there's 2 minutes left

- Measure: coding time for everyone in a swarm
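The measure in this example can be computed from a simple log of who was at the keyboard and when. The sketch below assumes a hypothetical log of `(coder, start_minute, end_minute)` entries for each swarm and compares the gap between the most and least keyboard time before and after the change. The numbers are invented for illustration.

```python
from collections import defaultdict

def coding_minutes(log, team):
    """Total minutes at the keyboard per team member for one swarm."""
    totals = defaultdict(int)
    for coder, start, end in log:
        totals[coder] += end - start
    # Members missing from the log show up with 0 minutes.
    return {member: totals[member] for member in team}

def spread(totals):
    """Gap between the most and least keyboard time; smaller is more even."""
    return max(totals.values()) - min(totals.values())

# Hypothetical rotation logs for two swarms.
team = ["Ana", "Ben", "Chi"]
before = [("Ana", 0, 40), ("Ben", 40, 75), ("Chi", 75, 90)]
after = [("Ana", 0, 30), ("Ben", 30, 62), ("Chi", 62, 90)]
print(spread(coding_minutes(before, team)))  # 25
print(spread(coding_minutes(after, team)))   # 4
```

A smaller spread after the change suggests the experiment helped. A single swarm is a small sample, so repeat the measurement before drawing conclusions.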

Metrics and Dashboard

Think of metrics as unit tests for the team. A unit test for code won't prove the code is correct but it can detect when something's wrong. A good metric does the same. For example, commit history is not a good way to evaluate contribution, but when someone has few or no commits, you've detected there's some issue in coding or recordkeeping that needs fixing.
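As a rough illustration of a metric acting like a unit test, the sketch below flags team members with few or no commits. It assumes you already have a list of commit author names, for example from the output of `git log --format=%an`, and that those names match the team roster. Both are assumptions, and the function name is hypothetical.

```python
from collections import Counter

def low_commit_members(team, commit_authors, threshold=1):
    """Return members with fewer than `threshold` commits, with their counts."""
    counts = Counter(commit_authors)
    return {m: counts[m] for m in team if counts[m] < threshold}

# Hypothetical data: one author name per commit.
team = ["Ana", "Ben", "Chi"]
commit_authors = ["Ana", "Ben", "Ana", "Ana", "Ben"]
print(low_commit_members(team, commit_authors))  # {'Chi': 0}
```

A flagged name is a prompt to ask what is going on, not a verdict on contribution.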

Maintain a team dashboard of the metrics that matter, including coding, morale, learning, collaboration, and communication.

Display the data in a way that's easy to glance at, easy to update, and signals issues when they're small and easy to address.

Here's an example dashboard in a spreadsheet. It shows one metric that is improving but not yet where the team wants to be, and another that is still OK but has dropped this week.

| Metric | Target value | This week | Last week | Notes |
| --- | --- | --- | --- | --- |
| #zeros on gitstats | 0 | 1 | 2 | |
| velocity | 8 | 8 | 10 | |
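The two readings described for this table (improving but not yet on target, and on target but dropping) can be derived from the three numbers in each row. The sketch below is one possible way to do that. The `higher_is_better` flag is an assumption added for illustration, since the table itself does not say which direction is good for each metric.

```python
def status(target, this_week, last_week, higher_is_better=True):
    """Describe a metric as on/off target and improving/steady/declining."""
    sign = 1 if higher_is_better else -1
    on_target = sign * (this_week - target) >= 0
    change = sign * (this_week - last_week)
    trend = "improving" if change > 0 else "declining" if change < 0 else "steady"
    return ("on target" if on_target else "off target") + ", " + trend

# Rows from the example dashboard.
print(status(0, 1, 2, higher_is_better=False))  # off target, improving
print(status(8, 8, 10))                         # on target, declining
```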

Example data sources:

- Gitstats and other commit history information

- Mood charts

- Subgroup history and collaboration analysis

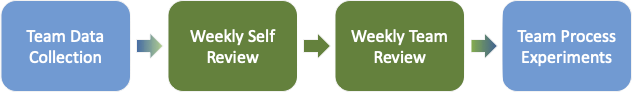

Self and Team Review

Just as the team should be reflecting on how it is doing every week, every team member should be reflecting personally as well. What do you think you have contributed to the product and to the team in the past week? What obstacles are preventing you from doing and learning more? What do you think you have learned? What do your teammates think you have contributed?

To foster this reflection, the class has weekly self and team reviews. The inputs to these reviews are both previous reviews and the metrics the team is tracking.

This alternative to the CATME peer review tool, used in previous classes, is designed to encourage frequent personal and team level reflections that:

- focus as much on team development as application development,

- use data and prior reflections to reveal trends, support experiments, and justify fairer peer evaluations.

Data collection and analysis

Two key things about data: don't ignore it, don't worship it.

The goal is to develop team habits that accumulate small amounts of the right data to detect problems early when they're easier to fix.

- Data can detect when there's a serious imbalance in coding contributions

- if the team's processes leave a digital trail on who did what.

- A simple spreadsheet can track and reveal growing issues in team morale

- if the team has a mood check-in process that is frequent and in the moment.

- A simple list of who has worked with whom can guide future partnering choices

- if the team keeps partnering notes.
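As an example of detecting a problem early from a small amount of data, the sketch below flags members whose mood has dropped at several check-ins in a row. It assumes a hypothetical spreadsheet export where each member has a list of numeric mood scores, oldest first. The 1-to-5 scale and the two-drop rule are illustrative choices, not part of the class process.

```python
def declining_moods(mood_log, drops=2):
    """Return members whose last `drops` check-ins each scored lower than the one before."""
    flagged = []
    for member, scores in mood_log.items():
        recent = scores[-(drops + 1):]
        # Need drops + 1 scores to observe `drops` consecutive decreases.
        if len(recent) == drops + 1 and all(
            later < earlier for earlier, later in zip(recent, recent[1:])
        ):
            flagged.append(member)
    return sorted(flagged)

# Hypothetical mood scores (1 = low, 5 = high), oldest first.
mood_log = {"Ana": [4, 4, 5, 4], "Ben": [5, 4, 3], "Chi": [3, 2]}
print(declining_moods(mood_log))  # ['Ben']
```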

Key areas to collect data on are contribution, communication, collaboration, and morale. These metrics align with the SPACE framework for tracking developer productivity:

- Guide to SPACE framework and metrics for developer productivity (13-minute read)

- The SPACE of Developer Productivity: There's more to it than you think. (30-minute read)

- An in-depth article by the authors of the SPACE framework.

Self reflection

We are terrible at remembering most of what happened even just a week ago. We forget how we felt about it at the time. Hence the weekly self review: a brief exercise in which you reflect on and record

- your engagement with the team and your contributions to the codebase for the past week,

- how that compares, positively and negatively, to the week before, and

- one or two personal goals for the coming week.

How to do self review

Open your team's Data Viewer sheet.

- Read your prior self reviews on the Previous Self Responses tab for personal themes, goals, and accomplishments.

- Scan the tabular summary of the team reviews on the Ratings page for trends in your engagement with the team.

- Look at recent data collected by the team, such as code contributions via the Commit Analyzer, coding partners, swarm attendance, and so on.

Fill in the Self Review form. There's a link on the Config page of the Data Viewer.

Be brief, specific, and frank about accomplishments and obstacles. Connect to the data, when possible.

Team review and evaluation

This is the hard part. No one likes to give someone a low score. Unfortunately, what often ends up happening is that everyone says everyone is doing fine, week after week, even though some team members have concerns. These concerns accumulate until several team members suddenly give a team member low scores, usually on the last evaluation, when it's too late to do anything about it. This is unfair.

So in this class, the team review process:

- Uses frequent (weekly) low-stakes evaluations, rather than one or two high-stakes evaluations, so that it is easier to be honest earlier.

- Encourages the use of team-collected objective data, to provide a fair basis for evaluations.

- Includes personal reflections so that team members can be aware of each other's accomplishments and obstacles.

- Focuses on goals for the coming week as much as accomplishments of the previous week.

How to review your teammates:

- Read the self reviews from your teammates.

- Review the team's data. There's more than you think:

- Team communications (Slack, text, commit messages, ...)

- Contribution data (commits, gitstats, Trello updates, ...)

- Collaboration data (who worked with whom)

- Morale data (mood chart history)

Then comment on each team member. For each teammate, find something specific to say.

- Praise for contributing to the team (mentoring, reminding people of due dates) or the app (coding, debugging)

- Things you'd like to see, like more time with the team in swarms or class, more code testing before pushing to main, ...

- If you haven't interacted in the past week with some teammates, make a note of that! That's important to track.

Use the checkboxes to summarize how you think things went for each teammate this week.

At the top of the team review form, suggest one or two things you think the team is doing well and something you think the team should try doing.